The International AI Safety Report

Reflections and key points from “The International Scientific Report on the Safety of Advanced AI”, January 2025.

AI’s Risks & “Immense Potential”

At the AI Action Summit in Paris on February 11, European Commission President Ursula von der Leyen announced the launch of InvestAI, an initiative aimed at mobilizing €200 billion for investment in AI, including a new European fund of €20 billion for AI gigafactories. In a separate announcement, French President Emmanuel Macron pledged a €109 billion plan to boost AI in France.

Incredible news! The EU's massive investment in AI should make it competitive with the business tycoons across the Atlantic Ocean. At the same time, as many of my readers know too well, advanced AI comes with unprecedented risks.

If AI is advanced at all costs without proper consideration of its wider impacts on society, the tech movement could end up causing a lot more harm than good. Some profit-seeking companies would like to ignore these risks to reach their quarterly earnings targets, but civil society should hold them accountable.

The first-ever International AI Safety Report was published in connection with the AI Action Summit in Paris. It was written by a diverse group of 96 independent AI experts, including an international Expert Advisory Panel nominated by 30 countries along with representatives from the OECD, the EU, and the UN. The International AI Safety Report focuses on “general-purpose AI” and aims to provide scientific information that will support policymaking without recommending any specific policies.

It classifies general-purpose AI risks into three categories, each with a number of sub-categories:

Malicious use risks

Harms to individuals through fake content: Non-consensual pornography and other harmful applications of deepfakes, financial fraud through voice impersonation, blackmailing, sabotage of personal and professional reputations, and psychological abuse.

Manipulation of public opinion: Because general-purpose AI systems make it easier to create persuasive content at scale, they can be used to manipulate public opinion and, for instance, to affect political outcomes.

Cyber offence: General-purpose AI systems make it easier and faster for malicious actors of varying skill levels to conduct cyberattacks.

Biological and chemical attacks: Recent general-purpose AI systems have displayed some ability to provide instructions and troubleshooting guidance for reproducing known biological and chemical weapons and to facilitate the design of novel toxic compounds.

Risks from malfunctions

Reliability issues: Unreliability can lead to harm, for example when a user consults a general-purpose AI system for medical or legal advice.

Bias: General-purpose AI systems can amplify social and political biases with respect to race, gender, culture, age, disability, political opinion, or other aspects of human identity. This can lead to discriminatory outcomes including unequal resource allocation, reinforcement of stereotypes, and systematic neglect of underrepresented groups or viewpoints.

Loss of control: Hypothetical future scenarios where general-purpose AI systems come to operate outside of anyone's control.

Systemic risks

Labor market risks: General-purpose AI systems have the potential to automate a very wide range of tasks, which could have a significant effect on the labor market and lead many people to lose their current jobs.

Global AI and R&D divide: AI research and development (R&D) is currently concentrated in a few Western countries and China. This could lead to an “AI divide” that could increase much of the world’s dependence on a small set of countries and contribute to global inequality.

Market concentration and single points of failure: If organizations across critical sectors such as finance or healthcare all rely on a small number of general-purpose AI systems, societies are left very vulnerable if a bug or vulnerability affects one of those systems.

Environmental risks: General-purpose AI development and deployment has rapidly increased the amounts of energy, water, and raw material consumed in building and operating the necessary compute infrastructure.

Privacy risks: Sensitive information in training data or in user interactions with a general-purpose AI system can leak, and malicious actors may gain access to it.

Copyright infringements: Both data collection and content generation challenge copyright law across jurisdictions. The legal uncertainty around data collection practices causes AI companies to share less information about the data they use, which makes third-party AI safety research harder.

None of these AI risks are surprising to regular readers of Futuristic Lawyer; I have covered all of them before. However, as the report acknowledges, risks related to general-purpose AI systems are very hard to fully account for, for several reasons:

the range of possible use cases for general-purpose AI systems is very broad.

developers still understand little about how the models actually operate once deployed.

AI’s constantly increasing capabilities and new developments such as agentic systems and reasoning models pose new, significant risks.

governments and non-industry researchers have limited insight into how AI systems work due to the closedness of the leading AI companies.

the companies themselves face strong competitive pressure which may lead them to deprioritize risk management.

Due to the difficulty of identifying and assessing risks related to general-purpose AI, there is no standardized risk management framework in wide use across sectors and jurisdictions – although numerous efforts are underway globally. Perhaps that is why the report’s conclusion after 213 pages is not particularly uplifting:

“The first International AI Safety Report finds that the future of general-purpose AI is remarkably uncertain.”

At the same time, the report’s conclusion states:

“General-purpose AI has immense potential for education, medical applications, research advances in fields such as chemistry, biology, or physics, and generally increased prosperity thanks to AI-enabled innovation. If managed properly, general-purpose AI systems could substantially improve the lives of people worldwide.”

Is this really true though? Does general-purpose AI have “immense potential”?

I would challenge the notion that experts in AI and machine learning necessarily have the skills or domain knowledge in education, medicine, chemistry, biology, or physics to make such statements.

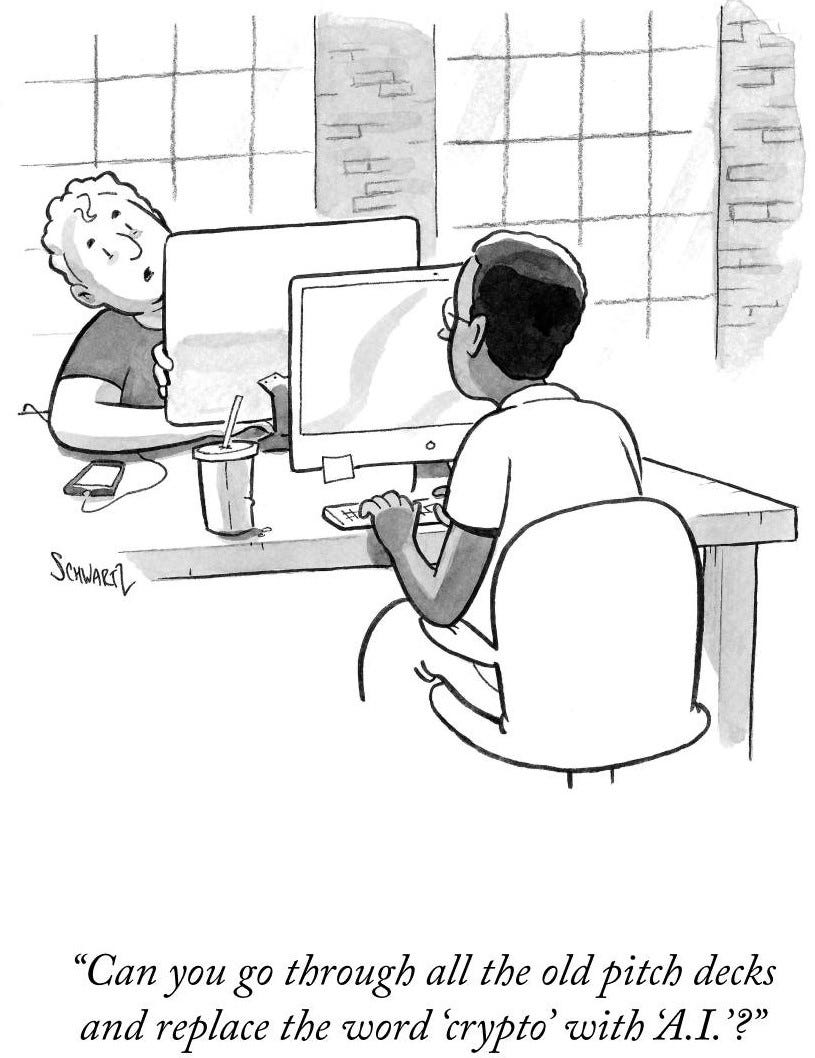

“AI will have a profound impact on society” is one of those mottos people involved in the industry have been repeating for a long time, long before ChatGPT’s release in November 2022, which marked general-purpose AI’s commercial breakthrough. The exact same phrase must have been written in the conclusions of hundreds, if not thousands, of reports and papers about AI, and it could just as easily have been written about crypto, blockchain, and virtual reality a few years ago.

My question: Do we in fact know that general-purpose AI’s potential is immense? What if its potential has already been reached? I personally think there is a non-negligible chance that we will only see incremental improvements to new general-purpose AI models from now on, much like the meme that each year’s new iPhone is released with just a slightly better camera. What else can general-purpose AI do from here on out that we haven’t already seen? Solve climate change or come up with a cure for cancer?

Of course, the field will continue to evolve and mature, but let’s not discount the simple fact that the outputs of general-purpose AI are still based on the input data we provide it with. General-purpose AI models may appear smart, but they cannot think for themselves and completely lack human qualities. They only automate knowledge we already have – which is indeed impressive – but that is nothing new by now.

Can we confidently say that the future potential is still “immense”? I am not so convinced, but try to change my mind if you can.
